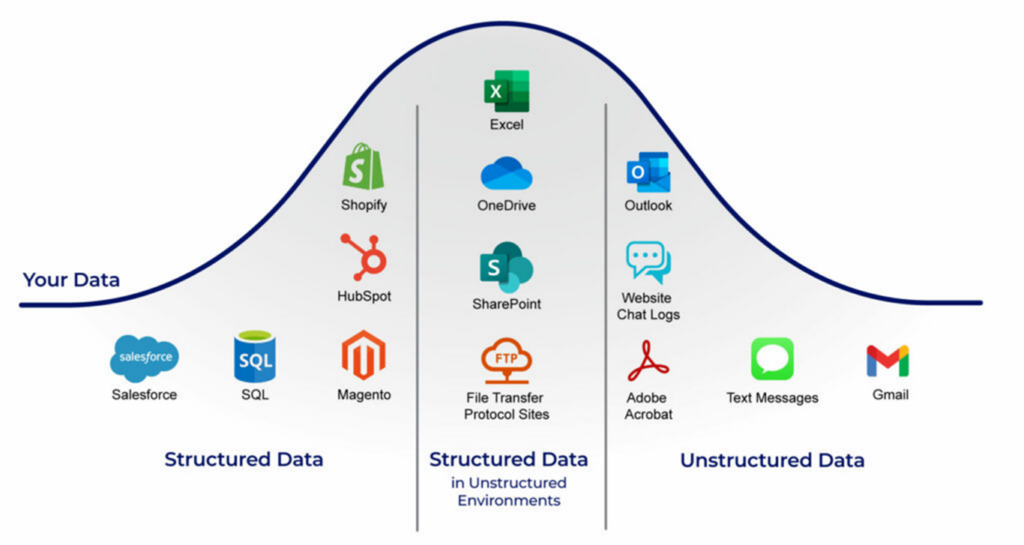

The mapping of personal data is the process of creating a link between personal data in one system or database and personal data in another system or database. This can be done to transfer personal data between systems or to integrate personal data from multiple sources into a single system.

Personal data mapping involves defining how personal data elements in one system relate to personal data elements in another system. It may also involve transforming or manipulating data to make it compatible with the target system. This process is important for data protection and privacy, as it allows organisations to ensure that personal data is handled in a secure and compliant manner.

A working example of data mapping

Imagine a retail company that wants to integrate data from their online and in-store sales systems to have a unified view of their customers’ purchases.

The company would first analyse the data in the online and in-store sales systems to understand its structure, format, and content, and then identify any data quality issues or inconsistencies that need to be addressed during the mapping process. It then creates a mapping design that defines how data elements in the online sales system correspond to data elements in the in-store sales system. For example, the customer’s name field in the online system should map to the customer’s name field in the in-store system.
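
A mapping design like the one described above can be written down as a simple lookup table. The sketch below is illustrative only: every field name (`cust_name`, `CustomerName`, `customer_name` and so on) and the `apply_mapping` helper are hypothetical, not taken from any real sales system.

```python
# Hypothetical field names: a real mapping design comes from analysing both systems.
ONLINE_TO_UNIFIED = {
    "cust_name": "customer_name",
    "email_addr": "email",
    "order_total": "purchase_amount",
}
IN_STORE_TO_UNIFIED = {
    "CustomerName": "customer_name",
    "Email": "email",
    "SaleAmount": "purchase_amount",
}


def apply_mapping(record, mapping):
    """Rename the fields of one source record to the unified field names.

    Fields with no entry in the mapping are returned separately so that
    they can be reviewed instead of being silently dropped.
    """
    mapped = {}
    unmapped = {}
    for field, value in record.items():
        if field in mapping:
            mapped[mapping[field]] = value
        else:
            unmapped[field] = value
    return mapped, unmapped


online_record = {
    "cust_name": "Ada Lovelace",
    "email_addr": "ada@example.com",
    "order_total": "19.99",
    "coupon": "SPRING",  # not part of the mapping design
}
mapped_record, unmapped_fields = apply_mapping(online_record, ONLINE_TO_UNIFIED)
```

Keeping the unmapped fields visible is a deliberate choice here: a field that is missing from the design is often a sign that the analysis step needs another pass.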

The company transforms the data from both systems to make it compatible with a new target system. This may involve converting data from one format to another, or applying data validation or cleansing rules. It then loads the transformed data into the target system, typically using data integration tools such as ETL software to automate the loading.
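
The transform and load steps might look roughly like the following. This is a minimal sketch under stated assumptions, not a description of any particular ETL product: an in-memory SQLite database stands in for the target system, and the record fields, the day/month/year source date format, and the `purchases` table are all invented for illustration.

```python
import sqlite3
from datetime import datetime
from decimal import Decimal


def transform(record):
    """Validate and normalise one record into the (assumed) target format."""
    email = record["email"].strip().lower()
    if "@" not in email:
        raise ValueError(f"invalid email: {record['email']!r}")
    # Assumed source format is day/month/year; the target stores ISO 8601 dates.
    purchased = datetime.strptime(record["purchase_date"], "%d/%m/%Y").date().isoformat()
    # Store money as integer pence to avoid floating-point rounding.
    amount_pence = int(Decimal(record["purchase_amount"]) * 100)
    # Collapse stray whitespace in the name.
    name = " ".join(record["customer_name"].split())
    return (name, email, purchased, amount_pence)


def load(records, conn):
    """Load transformed records and collect the ones that fail validation."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS purchases "
        "(customer_name TEXT, email TEXT, purchase_date TEXT, amount_pence INTEGER)"
    )
    loaded, rejected = 0, []
    for record in records:
        try:
            row = transform(record)
        except (KeyError, ValueError, ArithmeticError) as exc:
            rejected.append((record, str(exc)))
            continue
        conn.execute("INSERT INTO purchases VALUES (?, ?, ?, ?)", row)
        loaded += 1
    conn.commit()
    return loaded, rejected


conn = sqlite3.connect(":memory:")
loaded, rejected = load(
    [
        {"customer_name": "  Ada   Lovelace ", "email": " Ada@Example.com ",
         "purchase_date": "03/02/2024", "purchase_amount": "19.99"},
        {"customer_name": "Bob", "email": "not-an-email",
         "purchase_date": "03/02/2024", "purchase_amount": "5.00"},
    ],
    conn,
)
```

Rejected records are returned with the reason they failed, so they can be corrected at the source and reloaded.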

The company then verifies the quality of the mapped data in the target system. It compares the data in the source and target systems, or runs data quality checks and validation rules to ensure that the data is accurate and complete. By completing these steps, the company creates a single, unified view of its customers’ purchases, which allows it to better understand its customers and make more informed business decisions.
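
The verification step can be sketched as a handful of comparisons between source and target. The `verify` function below is a hypothetical helper, and the choice of `email` as the matching key and of the three required fields are assumptions made for the example.

```python
def verify(source_rows, target_rows, key="email",
           required=("customer_name", "email", "purchase_amount")):
    """Return a list of human-readable issues; an empty list means all checks passed."""
    issues = []

    # Completeness: every source row should have arrived in the target.
    if len(source_rows) != len(target_rows):
        issues.append(
            f"row count differs: source={len(source_rows)} target={len(target_rows)}"
        )
    source_keys = {row[key] for row in source_rows}
    target_keys = {row[key] for row in target_rows}
    for missing in sorted(source_keys - target_keys):
        issues.append(f"missing in target: {missing}")

    # Validity: required fields must not be empty in the target.
    for index, row in enumerate(target_rows):
        for field in required:
            if row.get(field) in (None, ""):
                issues.append(f"target row {index}: empty {field}")
    return issues


source = [
    {"customer_name": "Ada", "email": "ada@example.com", "purchase_amount": "19.99"},
    {"customer_name": "Bob", "email": "bob@example.com", "purchase_amount": "5.00"},
]
target = [
    {"customer_name": "Ada", "email": "ada@example.com", "purchase_amount": ""},
]
issues = verify(source, target)
```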

Common data mapping techniques

Manual data mapping means that a person creates the correspondence between data elements in one system and data elements in another by hand. Spreadsheet software, such as Microsoft Excel, is often used to create a mapping document that defines the relationships between the data elements. Automatic mapping uses software to create this correspondence instead. Data integration tools, such as Extract, Transform, Load (ETL) software, map data elements automatically based on predefined rules or algorithms.
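
To make "predefined rules or algorithms" concrete, here is one very simple rule: match fields whose names are nearly identical once case and separators are ignored. This is only a sketch using Python's standard `difflib` module, not how any specific ETL tool works. The `suggest_mapping` helper, the field names, and the 0.8 similarity cutoff are assumptions.

```python
import difflib
import re


def normalise(name):
    """Lower-case a field name and strip separators: 'Customer_Name' -> 'customername'."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def suggest_mapping(source_fields, target_fields, cutoff=0.8):
    """Suggest a target field for each source field, or None if nothing is close enough."""
    by_normalised = {normalise(target): target for target in target_fields}
    suggestions = {}
    for field in source_fields:
        matches = difflib.get_close_matches(
            normalise(field), list(by_normalised), n=1, cutoff=cutoff
        )
        suggestions[field] = by_normalised[matches[0]] if matches else None
    return suggestions


suggestions = suggest_mapping(
    ["Customer_Name", "E-mail", "loyalty_tier"],
    ["customer_name", "email", "purchase_amount"],
)
```

Fields that come back as `None` still need a manual decision, which is why automatic and manual mapping are often combined in practice.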

Transformation mapping transforms data from one format or structure to another to make it compatible with the target system. It typically relies on technologies such as XSLT (for XML) or JSON transformers. Object-relational mapping (ORM) maps between objects in a programming language and the relational model of a database. ORM tools such as Hibernate and Entity Framework automate the process of mapping between these two models.
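
The idea behind object-relational mapping can be shown with a hand-written example. The sketch below does not use Hibernate or Entity Framework. It uses only Python's standard library to illustrate the kind of translation those tools automate, and the `Customer` class, the `customer` table, and the `save` and `find_by_id` helpers are hypothetical.

```python
import sqlite3
from dataclasses import astuple, dataclass, fields


@dataclass
class Customer:
    id: int
    name: str
    email: str


def save(conn, customer):
    """Object -> row: derive the column list from the dataclass fields."""
    columns = [f.name for f in fields(customer)]
    placeholders = ", ".join("?" for _ in columns)
    conn.execute(
        f"INSERT INTO customer ({', '.join(columns)}) VALUES ({placeholders})",
        astuple(customer),
    )


def find_by_id(conn, customer_id):
    """Row -> object: rebuild a Customer from the selected columns."""
    row = conn.execute(
        "SELECT id, name, email FROM customer WHERE id = ?", (customer_id,)
    ).fetchone()
    return Customer(*row) if row else None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
save(conn, Customer(1, "Ada Lovelace", "ada@example.com"))
found = find_by_id(conn, 1)
```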

Data virtualisation creates a virtual data layer that abstracts the underlying data sources and presents a unified view of the data to the target system. Data virtualisation tools, such as Denodo or Informatica, create this virtual data layer and automate the process. Choosing the right technique will depend on the specific use case and the complexity of the process.
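
A database view gives a small-scale feel for the virtual layer idea: the data stays where it is, and consumers query a unified shape. This is only an analogy under simplifying assumptions, since dedicated virtualisation tools work across separate, heterogeneous sources rather than two tables in one SQLite database. The table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE online_sales (cust_name TEXT, order_total REAL);
    CREATE TABLE store_sales (CustomerName TEXT, SaleAmount REAL);
    INSERT INTO online_sales VALUES ('Ada Lovelace', 19.99);
    INSERT INTO store_sales VALUES ('Ada Lovelace', 5.00);

    -- The view copies no data: it maps both tables onto one set of column names.
    CREATE VIEW all_purchases AS
        SELECT cust_name AS customer_name, order_total AS amount, 'online' AS channel
        FROM online_sales
        UNION ALL
        SELECT CustomerName, SaleAmount, 'in-store'
        FROM store_sales;
    """
)

rows = conn.execute(
    "SELECT customer_name, amount, channel FROM all_purchases ORDER BY channel"
).fetchall()
```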

The process explained

The mapping process usually involves the following steps.

First, analyse the data in both the source and target systems to understand its structure, format, and content, and identify any data quality issues or inconsistencies that will need to be addressed during the mapping process. Next, create a data mapping design that defines how data elements in the source system correspond to data elements in the target system. This includes a detailed description of the data elements, their relationships, and any data transformations that will be required.
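
The analysis step often starts with a quick profile of each field. The `profile` function below is a hypothetical helper that shows the kind of summary that surfaces quality issues (missing values, mixed types) before a mapping design is written. The sample rows are invented.

```python
from collections import Counter


def profile(rows):
    """Summarise each field: missing values, distinct values, and the types seen."""
    report = {}
    all_fields = sorted({field for row in rows for field in row})
    for field in all_fields:
        values = [row.get(field) for row in rows]
        # Treat None, an empty string, and an absent key as missing.
        present = [value for value in values if value not in (None, "")]
        report[field] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "types": dict(Counter(type(value).__name__ for value in present)),
        }
    return report


sample_rows = [
    {"email": "ada@example.com", "amount": "19.99"},
    {"email": "", "amount": 5},
    {"email": "ada@example.com"},
]
report = profile(sample_rows)
```

In this sample, the mixed `str` and `int` types reported for `amount` are exactly the sort of inconsistency that the mapping design would need a transformation rule for.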

The next part of the process involves transforming the data to make it compatible with the target system. This may involve converting data between formats and applying data validation or cleansing rules. The transformed data should be loaded into the target system. This may involve using data integration tools, such as ETL software, to automate the process of loading the data.

Once the data is loaded, you need to verify the quality of the mapped data in the target system. This may include comparing the data to the source system, or running data quality checks and validation rules to ensure that the data is accurate and complete. The process can be iterative, and some steps may need to be repeated if issues or errors are found.

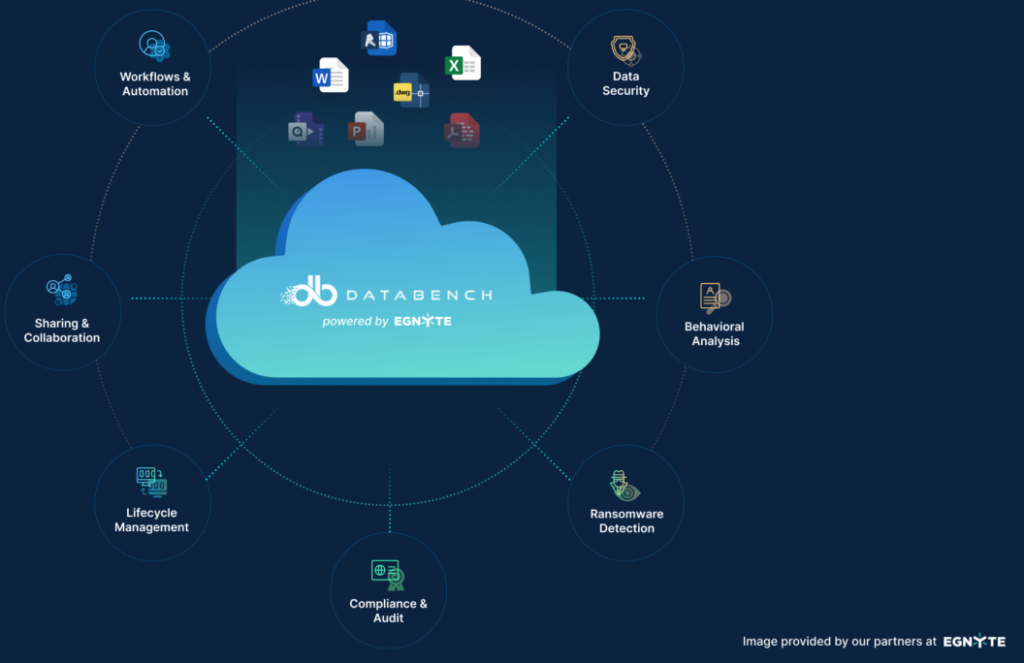

Simplify data collection with DataBench’s mapping tools

DataBench tools can simplify data collection by automating the mapping and transformation of data from one system or format to another. They reduce the need for manual data entry and the risk of errors.

The DataBench data mapping tools help to improve the quality of collected data by automating data validation and cleansing rules, which reduces errors and inconsistencies in the data. Automating mapping and transformation also increases the speed and efficiency of data collection.

Our data mapping tools can reduce the costs associated with data collection by automating tasks that would otherwise need to be done manually, such as data entry and data validation. They collect data from a wide range of sources, including databases, spreadsheets, and web services, which provides greater flexibility in the data that can be collected.

Data mapping tools help enforce data governance policies by providing centralised control over the data collection process. These tools make the process more efficient and accurate, allowing organisations to make better decisions with the data they collect.